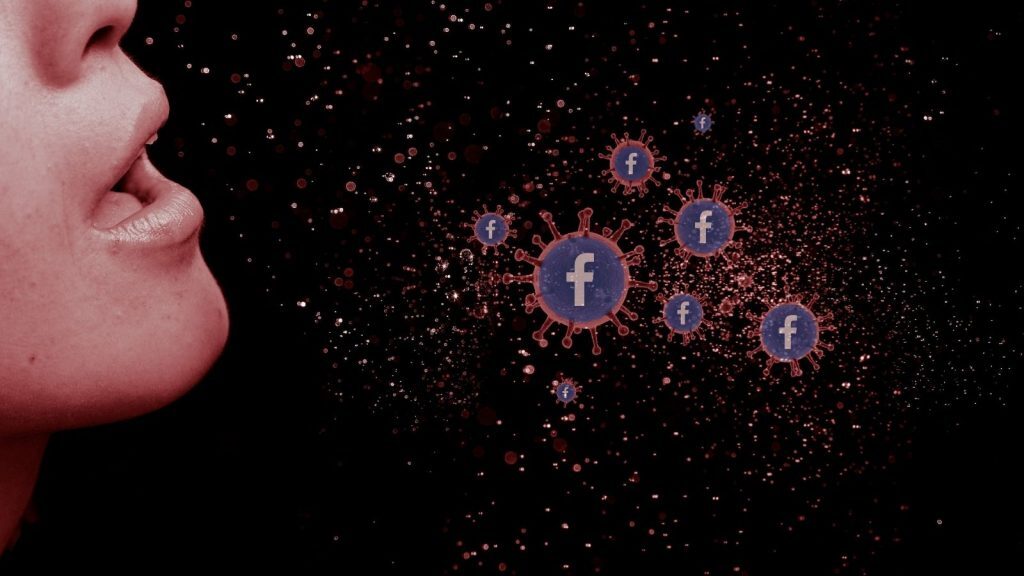

Vaccine misinformation is a problem that Facebook has had to tackle for months now. It hasn't helped that reports have surfaced claiming that just twelve accounts are responsible for almost two-thirds of the vaccine misinformation currently circulating online.

The social network disputes this view, but said in its most recent update on the state of viral misinformation on its platform that it has "…removed over three dozen Pages, groups and Facebook or Instagram accounts linked to these 12 people, including at least one linked to each of the 12 people, for violating our policies."

Facebook does some vaccinating of its own

Further action was taken against pages, groups, accounts and websites linked to those identified as spreading COVID misinformation, including downranking their posts or no longer recommending them to other Facebook users. Unspecified website domains linked to the accounts in question have also seen all posts containing links to their content de-prioritised in the News Feed.

Zuckerberg's company is at pains to point out that these twelve accounts, and their associated hangers-on, "…are in no way representative of the hundreds of millions of posts that people have shared about COVID-19 vaccines in the past months on Facebook."

Since the pandemic broke out, the company claims to have removed more than 3,000 pages, accounts or groups and deleted more than 20 million pieces of content for breaking its rules on COVID-19 and vaccine misinformation. It also admits that it still has a way to go.