Most women and (we’re sure) some men on the internet have horror stories about the vast quantities of dong that parade through their private messages. Instagram, and its parent company Meta, are working on a tool that’ll prevent quite so many er… tools from turning up on the platform.

We’re not sure what it is about the male member (pun intended) of the species. Put some of them in the vicinity of a camera and they think that sharing grainy pictures of their schlong with everyone is okay. It isn’t. But that doesn’t seem to have stopped the sausage parade.

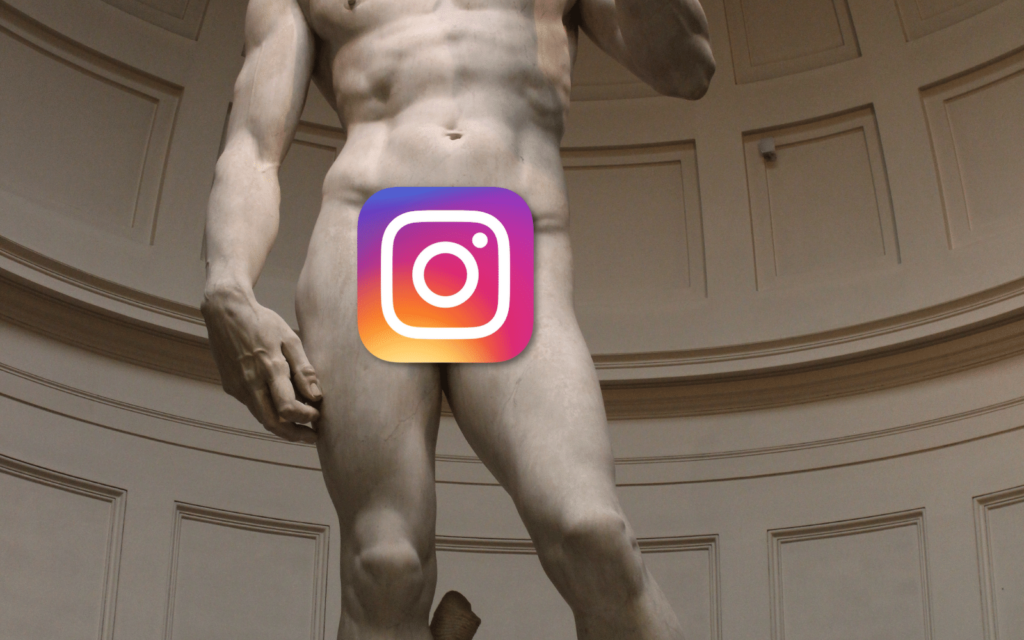

“Don’t be a dick.” – Instagram, probably

#Instagram is working on nudity protection for chats 👀

ℹ️ Technology on your device covers photos that may contain nudity in chats. Instagram CAN’T access photos. pic.twitter.com/iA4wO89DFd

— Alessandro Paluzzi (@alex193a) September 19, 2022

Instagram is working on a way to prevent unwanted nudes from showing up on the platform. It’s still early days, according to a statement sent to The Verge, but the days of drive-by dipstick distribution may be done.

The feature (and it absolutely is a feature) involves building a nudity detection system that operates on-device. This means Instagram doesn’t have access to private images sent on the platform. Meta seems hyper-aware of privacy at the moment. “We’re working closely with experts to ensure these new features preserve people’s privacy, while giving them control over the messages they receive,” said a Meta spokesperson.

But the early version of the function is a bit of a double-edged sword. When enabled, it’ll conceal images that contain nudity. It’s possible to see the image, but you’ll have to choose to do so. Or you could turn off the feature entirely, facing the internet completely unprotected. Which is how things work right now.
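For the curious, the cover-or-show behaviour described above can be sketched in a few lines of Python. This is a rough illustration, not Meta's code: the `nudity_score` field, the `NUDITY_THRESHOLD` cutoff and the function names are all our own assumptions, since Meta hasn't said how its on-device detector scores images or where it draws the line.

```python
from dataclasses import dataclass

# Hypothetical cutoff; Meta has not published how its detector scores images.
NUDITY_THRESHOLD = 0.8


@dataclass
class IncomingPhoto:
    nudity_score: float      # assumed output of an on-device classifier, 0.0 to 1.0
    revealed: bool = False   # set once the recipient chooses to view the photo


def is_covered(photo: IncomingPhoto, protection_enabled: bool) -> bool:
    """Return True if the photo should be hidden behind a cover in the chat."""
    if not protection_enabled:
        return False  # feature switched off: everything shows, as it does today
    if photo.revealed:
        return False  # the recipient opted in to seeing this one
    return photo.nudity_score >= NUDITY_THRESHOLD


def reveal(photo: IncomingPhoto) -> None:
    """Record the recipient's choice to view a covered photo."""
    photo.revealed = True
```

Everything in this sketch happens on the recipient's phone and nothing is sent to a server, which fits the claim that Instagram can't access the photos. Where the cutoff sits matters: set it too low and holiday snaps get covered, too high and the sausage parade marches on.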

Instagram’s genital concealment system still has a way to go before it merges with this reality. The company said that it would have more to share once the feature goes into real-world testing. We’d expect that it won’t work perfectly. Image recognition isn’t an exact science. It’s possible to mistake one thing (an unwelcome johnson) for another thing (an unwelcome Johnson) from the right angle.