We already know Apple is working on a way to squeeze Apple Intelligence into a little metal box that'll cling to your chest. Then there are rumours that the Fruit Company will stick a couple of IR sensors down the stems of its AirPods to help them see the world. The plans don't stop there, according to Bloomberg's Mark Gurman.

Rose-gold-tinted glasses

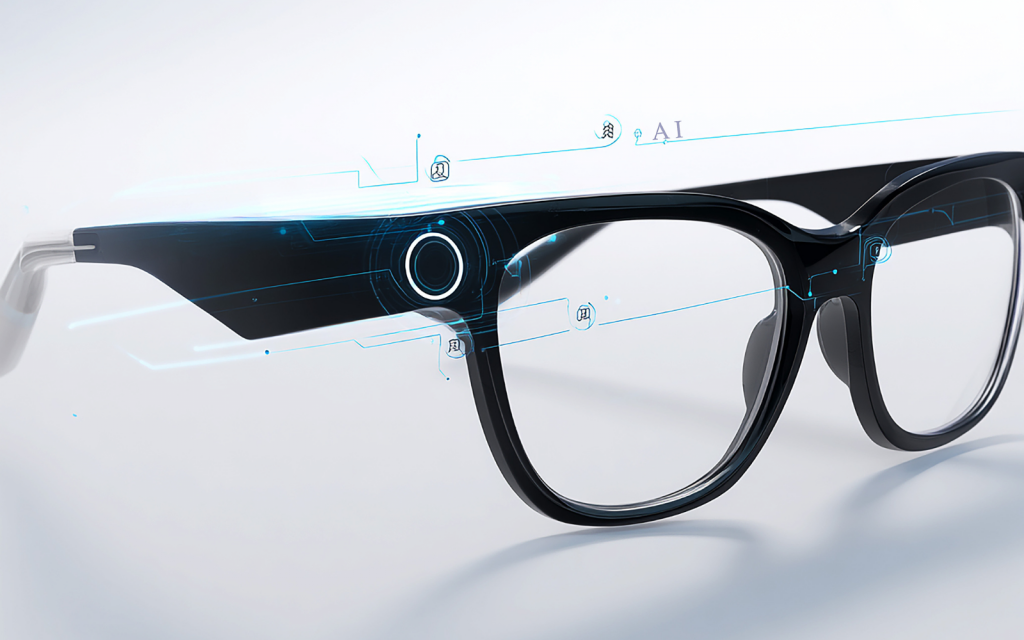

In its push to flesh out its wearables range (an area that Google, Meta and even OpenAI are chasing), Apple is reportedly "accelerating development" on three wearables. We've already mentioned the first two. The third will take the form of proper smart glasses. Nope, it's not another Vision Pro. Smart glasses were only the word on the street as recently as October last year, but now it seems Apple is taking them seriously.

All three devices will reportedly need to pair with the user's iPhone to function (not that we expect anyone outside the walled garden to be gunning for one of these). Apple's engineers are said to be designing them around the upgraded Siri assistant, which will rely on the devices' cameras to see the world and carry out tasks.

Apple is seemingly funnelling most of its budget into building a decent pair of smart glasses. "The AirPods and pendant are envisioned as simpler offerings, equipped with lower-resolution cameras designed to help the AI work rather than for taking photos or videos," Gurman said, adding that the glasses would be more "upscale".

Before Apple can make serious inroads into the wearables market, it'll need a sturdier version of Siri to lean on. Google is lending a hand to make that happen (for a whole lot of money, we should add), which should help the Fruit Company catch up to the big players in AI. Gurman reckons the improved Siri might arrive as soon as iOS 27, which the company will likely debut at its next WWDC showcase in the middle of the year.