Nowhere is the advance of technology more evident than in the rise of robots and artificial intelligence. From smart devices to self-checkout lanes to Netflix recommendations, robots (the hardware) and AI (the software) are everywhere inside the technology of modern society. They’re increasingly common in ads, too: During the 2019 Super Bowl alone, seven ads aired featuring either robots or AI.

Since I began studying human-robot interactions almost a decade ago, I’ve observed that in most ads, robots typically fall into one of three general categories: scary, sad or stupid. All three perpetuate common misconceptions about technologies that are already beginning to play a pivotal role in people’s lives.

The fear factor

“Scary robot” ads are inevitable, given the popularity of the sinister robot trope. Advertisers, like Hollywood, embrace scary robot narratives because they’re more dramatic than ones in which robots and humans get along.

“Fear is Everywhere,” a paranoia-inducing 2019 commercial, advertises SimpliSafe home security systems, which use some of the same monitoring technology the ad demonizes. Rather than reminding viewers of their concerns about burglars or basement flooding, the ad highlights robots and AI as the omnipresent danger. A woman in an electronics store asks her friend if he’s listening, and a creepy computer voice issues forth from a speaker: “Always, Denise.”

That same ad also highlights a second major type of fear – that robots will replace humans. A man watching a sporting event tells his friends, “In five years, robots will be able to do your job, and your job and your job,” while a robot sitting in the stands listens menacingly, as if affirming the assertion.

Then, of course, there’s the third trope, of the evil robot intent on harming people. A 2017 Halo Top ice cream ad, for example, functions as a 90-second horror movie, in which a robot force-feeds a woman ice cream, and then casually mentions that everyone she knows is dead.

There are real threats to humans from robots and AI. Automation may eliminate millions of jobs – and it might create many others that don’t yet exist. Most likely, both will happen, as has happened throughout history: Elevator operators disappeared and social-media manager positions were created. The threat revolves around who will and who won’t be able to adjust or receive training to get the new jobs.

But the world is a long way off from robots that would justify the “Frankenstein Complex,” Isaac Asimov’s phrase for the human fear that poorly designed mechanical creations might turn against humanity. Robots have no intentions – only instructions. They can act as though they have feelings, but experience no actual emotion. No one knows if robot emotion or sentience is even possible.

Ads that instill fear of technology in humans can foster an unrealistic and unhelpful mindset for adapting to the increasing presence of this technology in our lives – whether in criminal justice, health care or other areas. Fear can also distract people from properly understanding and planning for ways in which humans can continue to offer meaningful skills and insights beyond the abilities of any machine.

Doom and gloom

“Sad robot” ads combat people’s fears about robots while simultaneously eliciting sympathy for them. In a 2019 Pringles ad, a smart device bemoans its lack of hands to stack chips or mouth to eat them. The robot’s physical limitations reassure viewers of human superiority, and yet the robot is advanced enough to have genuine feelings of sadness.

TurboTax’s RoboChild perpetuated the myth of robot intelligence in two appearances during the 2019 Super Bowl. RoboChild, which looks like young Haley Joel Osment’s face stuck on a small robot body, wants to be an accountant, but encounters constant reminders that it’s in a human world. A person tells RoboChild it isn’t emotionally complex enough for the job, correctly distinguishing between the human and robot abilities to feel emotion – while sparking viewers’ sympathy for the robot.

However, emotion isn’t necessary to fulfill most accounting functions: Artificial intelligence already performs a number of financial tasks, many of which require human interaction.

Falling to pieces

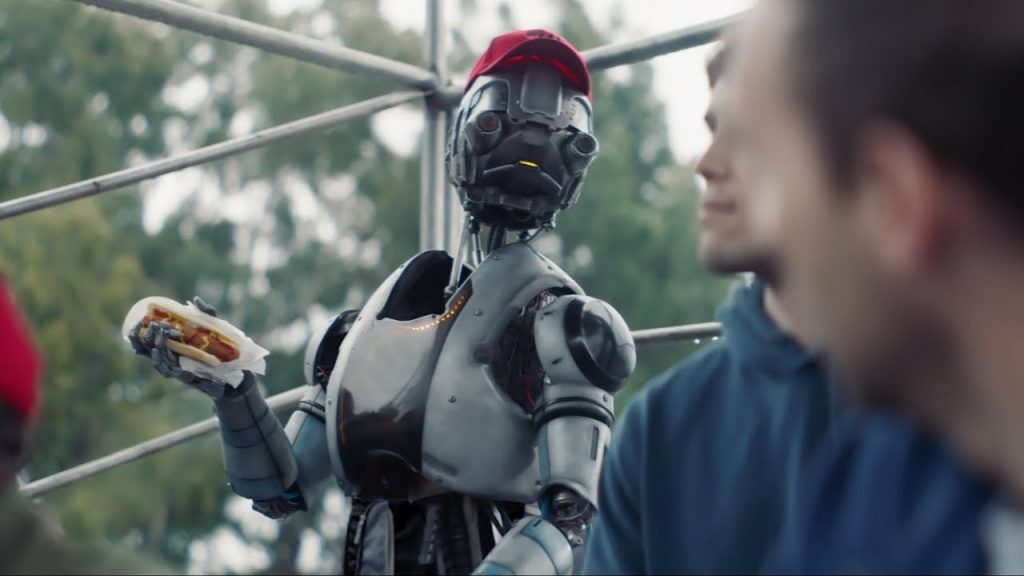

The third category of advertising robots doesn’t evoke fear or sympathy, but rather ridicule. A 2018 State Farm ad, for instance, pokes fun at a rival agency that has begun using cheap robot agents instead of human ones. The employee robot is a mess, spurting both hydraulic fluid and gibberish. In “stupid robot” ads, robots have cognitive constraints, sometimes in addition to physical ones.

These ads are at least somewhat realistic, as robots and AI have fundamental limitations – even the system that can beat an international Go champion isn’t much good at anything else. Even so, portraying robots as a collection of laughable, malfunctioning parts undermines the seriousness of their implications. Humans who are laughing at dumb machines may not think clearly or prepare actively for a future in which even limited robots and AI are key players.

Amazon’s Super Bowl ad featuring Alexa fails initially seemed like a collection of “stupid robot” highlights. A collar that allows a dog to order an entire truckload of food reminds viewers of Alexas that interpreted TV news or casual conversations as directives to buy products.

The ad rightly makes the point that no product is perfect – but it also subtly demonstrates the power of Amazon’s technologies, which in the ad shut down an entire continental power grid by accident. The technology itself is portrayed as dysfunctional – and something over which we can all have a laugh. However, the failures illustrate that the flaws lie in human efforts of concept, design or programming. Laughing at the machines can distract people from that deeper insight, or from considering who should be responsible when automation-enabled disaster strikes.

Commercials aren’t likely to encourage viewers to seek out legitimate information about new technologies. Their main job is to sell a product or service, not contribute to an informed society. But they need not perpetuate generalized and unrealistic fears. The more misdirection people absorb about robots and AI, the less capable they will be of understanding and managing the real implications of technological advances.

- The author is Lecturer of Rhetoric, Boston University

- This article first appeared on The Conversation