As energy use rises and the planet warms, you might have dreamed of an energy source that works 24/7, rain or shine, quietly powering homes, industries and even entire cities without the ups and downs of solar or wind – and with little contribution to climate change. The promise of new engineering techniques for geothermal energy – heat from the Earth itself – has attracted rising levels of investment to this reliable, low-emission power source that can provide continuous electricity almost anywhere on the planet. That includes ways to harness geothermal energy from idle or abandoned oil and gas wells. In the first…

Author: The Conversation

A proposed constellation of satellites has astronomers very worried. Unlike satellites that reflect sunlight and produce light pollution as an unfortunate byproduct, the ones by US startup Reflect Orbital would produce light pollution by design. The company promises to produce “sunlight on demand” with mirrors that beam sunlight down to Earth so solar farms can operate after sunset. It plans to start with an 18-metre test satellite named Earendil-1, which the company has applied to launch in 2026. It would eventually be followed by about 4,000 satellites in orbit by 2030, according to the latest reports. So, how bad would the light pollution be? And perhaps…

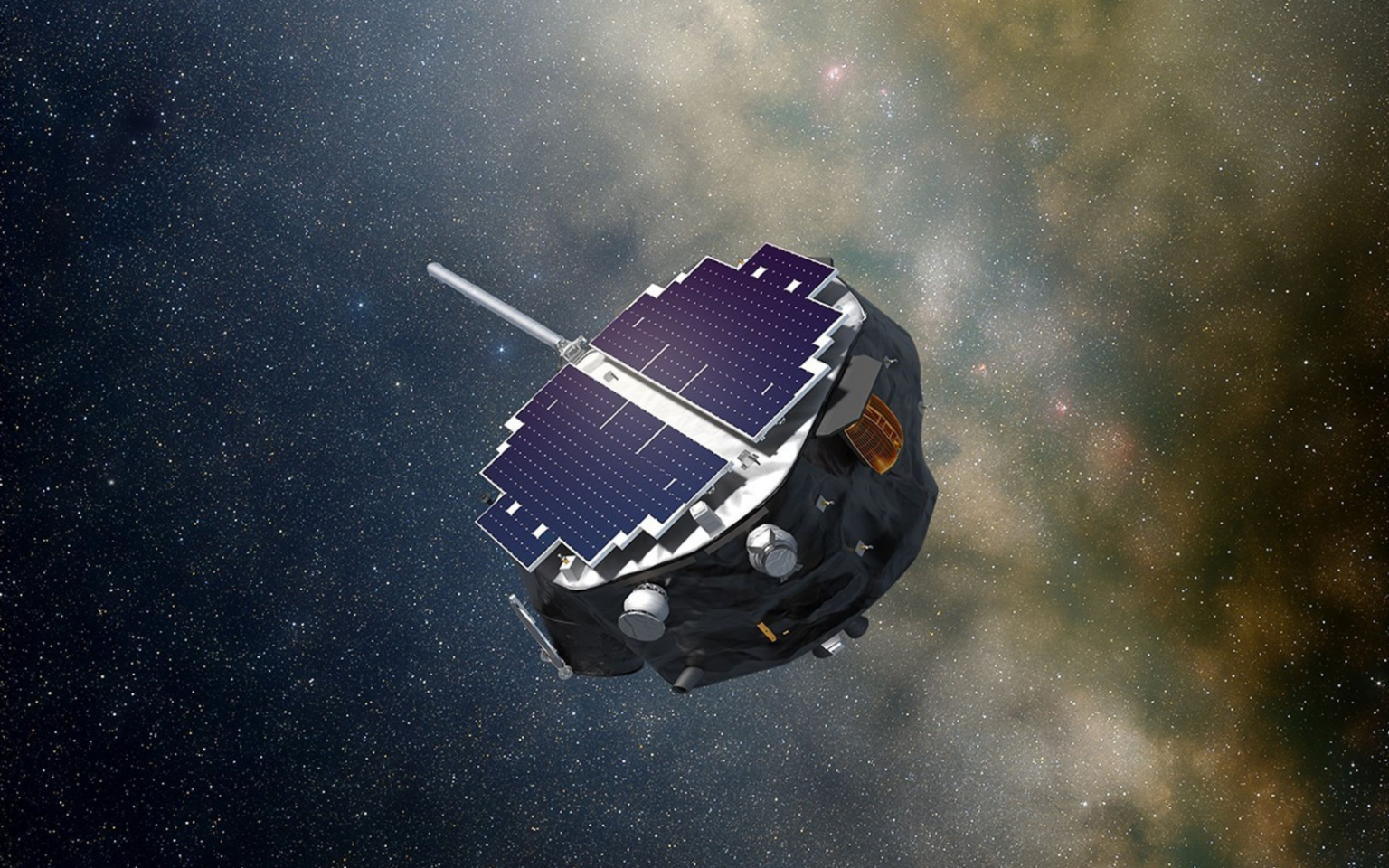

Even from 150 million kilometres away, activity on the Sun can have adverse effects on technological systems on Earth. Solar flares – intense bursts of energy in the Sun’s atmosphere – and coronal mass ejections – eruptions of plasma from the Sun – can affect the communications, satellite navigation and power grid systems that keep society functioning. On Sept. 24, 2025, NASA launched two new missions to study the influence of the Sun on the solar system, with further missions scheduled for 2026 and beyond. I’m an astrophysicist who researches the Sun, which makes me a solar physicist. Solar physics is part of the wider field of…

Some of the world’s biggest tech firms have soared in value over the last year. As AI evolves at pace, there are hopes that it will improve lives in ways that people could never have imagined a decade ago – in sectors as diverse as healthcare, employment and scientific discovery. OpenAI is now worth US$500 billion (£373 billion), compared with US$157 billion last October. Another firm, Anthropic, has almost trebled its valuation. But the Bank of England has now warned of a possible rapid “correction” due to its concerns about these staggering valuation rises. The question is whether these values are realistic – or based on…

OpenAI’s recent rollout of its new video generator Sora 2 marks a watershed moment in AI. Its ability to generate minutes of hyper-realistic footage from a few lines of text is astonishing, and has raised immediate concerns about truth in politics and journalism. But Sora 2 is rolling out slowly because of its enormous computational demands, which point to an equally pressing question about generative AI itself: What are its true environmental costs? Will video generation make them much worse? The recent launch of the Stargate Project — a US$500 billion joint venture between OpenAI, Oracle, SoftBank and MGX — to build massive AI…

A US judge recently decided not to break up Google, despite a ruling last year that the company held a monopoly in the online search market. Between Google, Microsoft, Apple, Amazon and Meta, there are more than 45 ongoing antitrust investigations in the EU (the majority under the new EU Digital Markets Act) and in the US. While the outcome could have been much worse for Google, other rulings and investigations have the potential to cut to the heart of how the big tech companies make money. As such, these antitrust cases can drive real change around how the tech giants…

The frequency and length of daily smartphone use continue to rise, especially among young people. It’s a global concern, driving recent decisions to ban phones in schools in Canada, the United States and elsewhere. Social media, gaming, streaming and interacting with AI chatbots all contribute to this pull on our attention. But we need to look at the phones themselves to get the bigger picture. As I argue in my newly published book, Needy Media: How Tech Gets Personal, our phones, and more recently our watches, have become animated beings in our lives. These devices can build bonds with us by recognising our presence and reacting…

The electrification boom of the 1920s set the United States up for a century of industrial dominance and powered a global economic revolution. But before electricity faded from a red-hot tech sector into invisible infrastructure, the world went through profound social change, a speculative bubble, a stock market crash, mass unemployment and a decade of global turmoil. Understanding this history matters now. Artificial intelligence (AI) is a similar general purpose technology and looks set to reshape every aspect of the economy. But it’s already showing some of the hallmarks of electricity’s rise, peak and bust in the decade known as the Roaring Twenties. The reckoning that followed could be…

When we post to a group chat or talk to an AI chatbot, we don’t think about how these technologies came to be. We take it for granted we can instantly communicate. We only notice the importance and reach of these systems when they’re not accessible. Companies describe these systems with metaphors such as the “cloud” or “artificial intelligence”, suggesting something intangible. But they are deeply material. The stories told about these systems centre on newness and progress. But these myths obscure the human and environmental cost of making them possible. AI and modern communication systems rely on huge data…

There are many claims to sort through in the current era of ubiquitous artificial intelligence (AI) products, especially generative AI ones based on large language models or LLMs, such as ChatGPT, Copilot, Gemini and many, many others. AI will change the world. AI will bring “astounding triumphs”. AI is overhyped, and the bubble is about to burst. AI will soon surpass human capabilities, and this “superintelligent” AI will kill us all. If that last statement made you sit up and take notice, you’re not alone. The “godfather of AI”, computer scientist and Nobel laureate Geoffrey Hinton, has said there’s a 10–20% chance AI will lead…