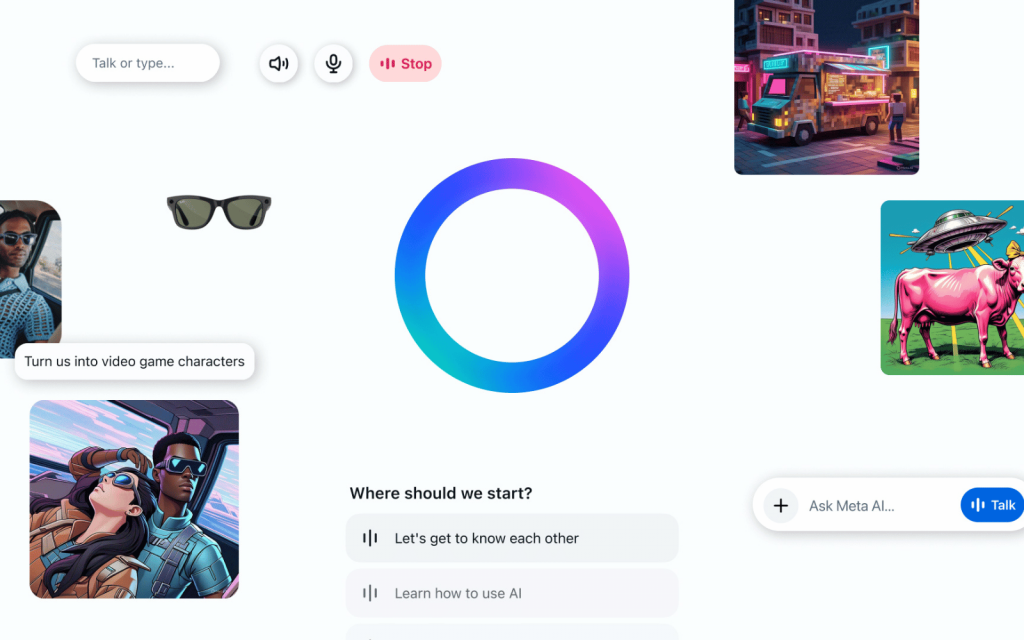

If you were thinking about getting a pair of Meta smart glasses, you may want to consider that your chats with Meta’s AI are going to be used to serve you ads. The company is rolling out the feature to its user base of one billion across all its platforms and devices, and while it claims the data will only be used for ads and content, this opens up avenues for even more insidious forms of privacy encroachment down the line.

Meta is watching you

The company says it’ll add user interactions (both voice and text) with its AI products to its existing user data, including likes and follows, so it can shape your ad and content recommendations. The example Meta gives is of a user talking to its AI chatbot about hiking, which leads to the user seeing ads about hiking boots, local hiking groups to join, or friends’ trail updates.

Meta’s privacy policy manager Christy Harris says, “People’s interactions simply are going to be another piece of the input that will inform the personalization of feeds and ads. We’re still in the process of building the first offerings that will make use of this data.” The social media company also assures users that conversations about sensitive topics such as religion, sexual orientation, political views, or ethnicity will not be included in the ad training data.

From 7 October 2025, Meta will send out in-product notifications and emails about the change, which will take effect on 16 December 2025. The feature will roll out worldwide, except in the UK, the EU, and South Korea.

Considering Meta’s growing dominance in the wearable tech industry, this change presents a rather dystopian sort of threat to user privacy. It’s not a stretch to imagine what other kinds of personal data Meta will try to monetise next; the “Fifteen Million Merits” episode of Black Mirror comes to mind. Maybe the EU’s strict user privacy laws aren’t so bad after all.