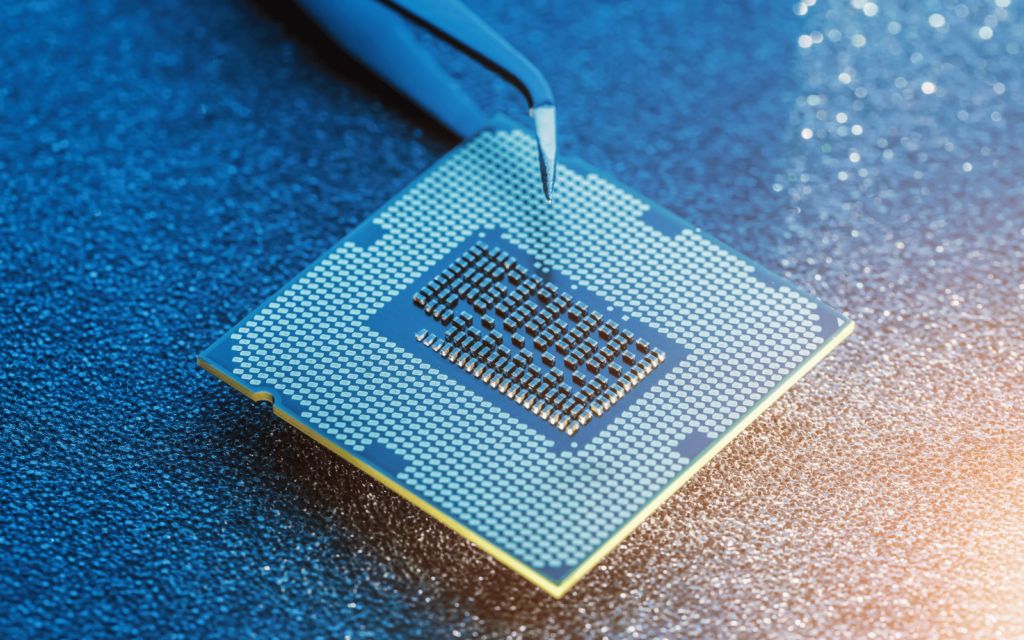

What is now known as Moore’s law began when Intel co-founder Gordon Moore wrote a 1965 article for the 35th-anniversary issue of Electronics magazine. Titled “Cramming more components onto integrated circuits”, it was where Moore, then director of research and development at Fairchild Semiconductor, made his now-famous prediction about the semiconductor industry.

“The complexity for minimum component costs has increased at a rate of roughly a factor of two per year. Certainly over the short term this rate can be expected to continue, if not to increase,” he wrote.

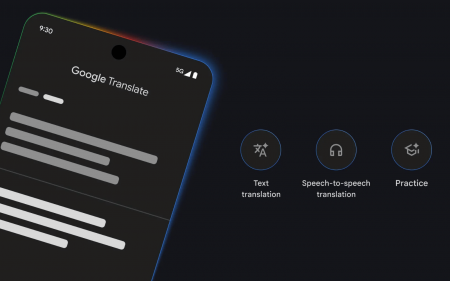

It wasn’t until Caltech professor Carver Mead popularised the term “Moore’s law” in 1975 that it gained its status as the unofficial projection for the growth of the chips that drove the computer revolution and now power everything from smartphones to cars. Along the way, a corollary “law” was added: as processors’ capacity doubles every two years, their cost halves.

At some point, “every two years” became “every 18 months”, a figure Moore said he never gave but which has largely held true. Moore, a pioneer in so many ways, died in March 2023, aged 94.

In a 2005 Economist article to celebrate his law’s 40th anniversary, Moore said: “It turned out to be much more accurate than it had any reason to be.” But, he added, he couldn’t bring himself to use the expression “Moore’s law” for 20 years.

Moore’s message was effective because, as The Economist wrote, “the cost of computation, and all electronics, appeared certain to plummet, and still does today, thus allowing all sorts of other progress. And, indeed, for four decades, Moore’s law has served as shorthand for the rise of Silicon Valley, the boom in PCs (which surprised even Moore, who had forgotten that he had predicted home computers), the dot-com boom, the information superhighway, and other exciting things.”

Asked about his original article in a 2015 interview, Moore said: “I just did a wild extrapolation, saying it’s going to continue to double every year for the next 10 years.”

In the event, that consistent growth lasted for half a century, and similar curves can now be seen in many other technology-heavy industries, including the photovoltaic solar panels that capture the sun’s energy and convert it to electricity.

Moore may have joked that his figures were a “wild extrapolation”, but it was one made by a man immersed in his industry, applying the very human trait of intuitive guesswork. For all the current excitement about artificial intelligence (AI) and new large language models (LLMs), it’s worth remembering what truly sets human intelligence apart. Such intuitive leaps are something computers and AI will take a long time to master.

Just shy of its 60th anniversary, Moore’s law is still driving an industry in which the transistors on a microprocessor are counted “to the closest million”, as one Intel executive joked at a Pentium launch many years ago. As Moore himself quipped in that Economist article: “Moore’s law is a violation of Murphy’s law [‘anything that can go wrong will go wrong’]. Everything gets better and better.”

- This article first appeared in the Financial Mail.