That’s what tech entrepreneur Elon Musk suggested in a recent presentation of the Neuralink device, an innovative brain-machine interface implanted in a pig called Gertrude. But how feasible is his vision? When I raised some brief reservations about the science, Musk dismissed them in a tweet saying: “It is unfortunately common for many in academia to overweight the value of ideas and underweight bringing them to fruition. The idea of going to the moon is trivial, but going to the moon is hard.”

Brain-machine interfaces use electrodes to translate neuronal information into commands capable of controlling external systems such as a computer or robotic arm. I understand the work involved in building one. In 2005, I helped develop Neurochips, which recorded brain signals, known as action potentials, from single cells for days at a time and could even send electrical pulses back into the skull of an animal. We were using them to create artificial connections between brain areas and produce lasting changes in brain networks.

Unique brains

Neuroscientists have, in fact, been listening to brain cells in awake animals since the 1950s. At the turn of the 21st century, brain signals from monkeys were used to control an artificial arm. And in 2006, the BrainGate team began implanting arrays of 100 electrodes in the brains of paralysed people, enabling basic control of computer cursors and assistive devices.

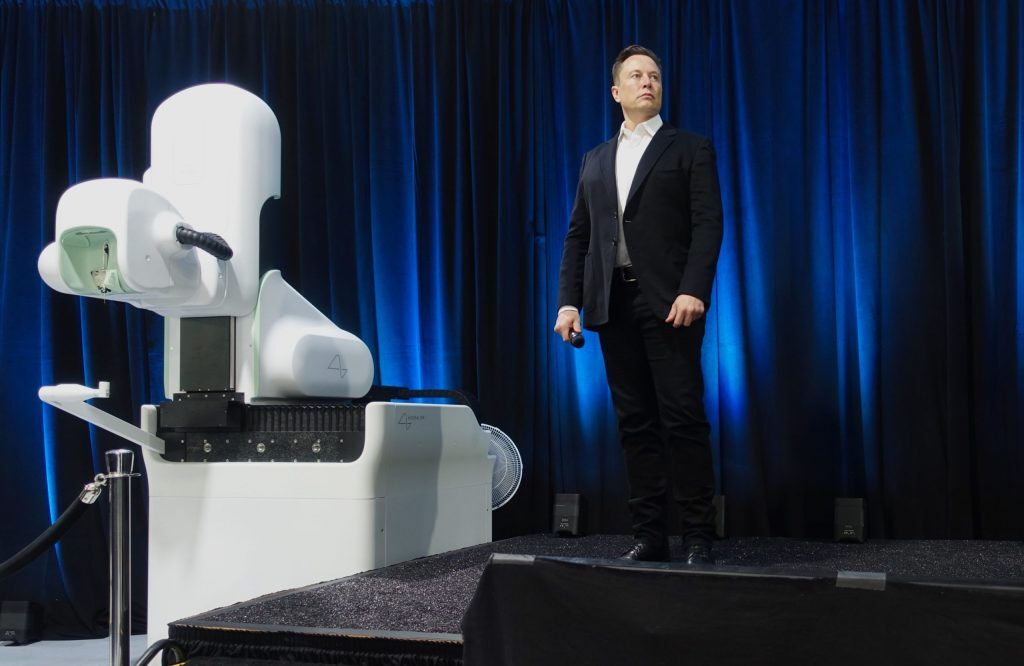

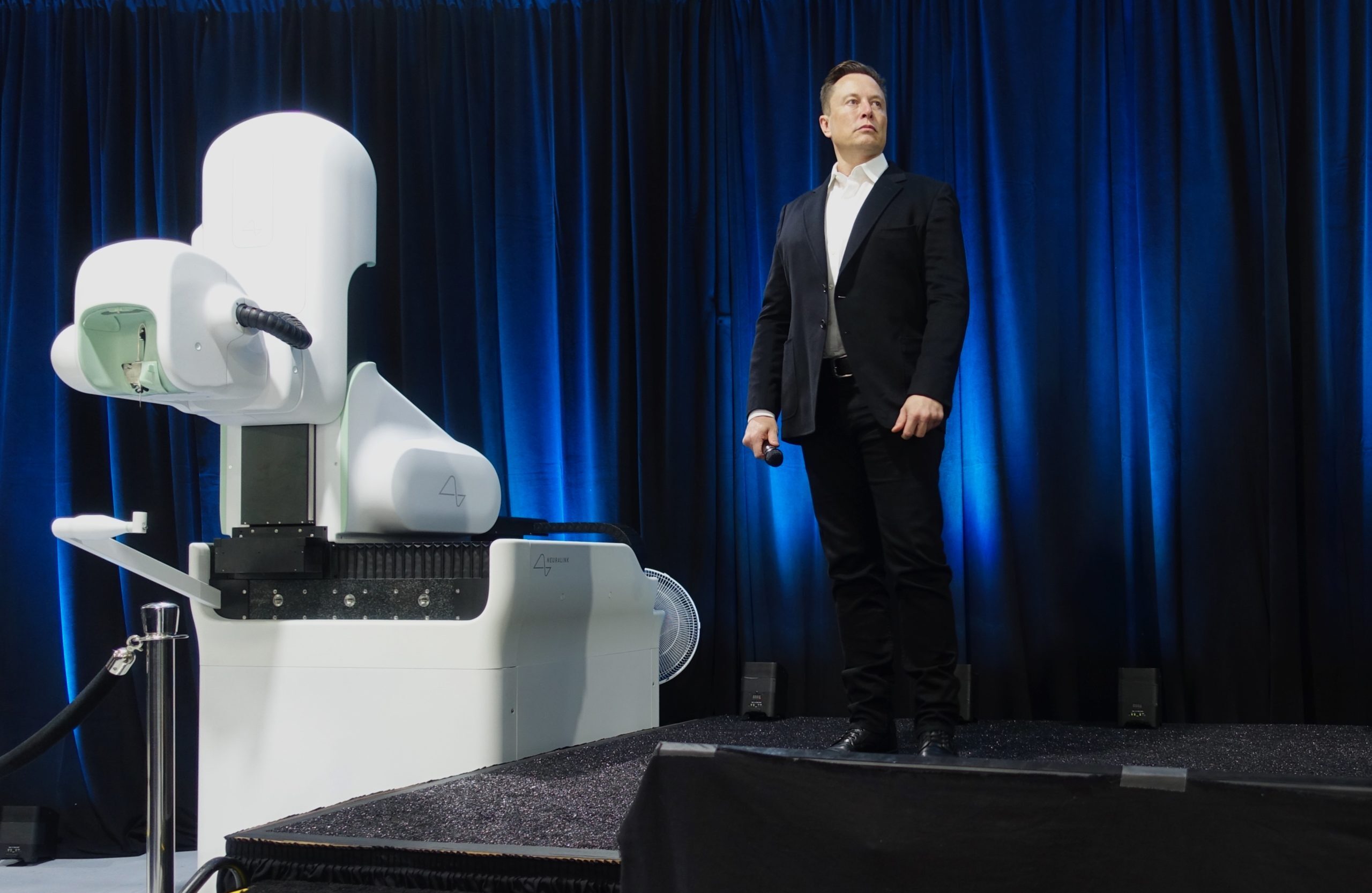

I say this not to diminish the progress made by the Neuralink team. They have built a device to relay signals wirelessly from 1,024 electrodes implanted into Gertrude’s brain by a sophisticated robot. The team is making rapid progress towards a human trial, and I believe their work could improve the performance of brain-controlled devices for people living with disabilities.

But Musk has more ambitious goals, hoping to read and write thoughts and memories, enable telepathic communication and ultimately merge human and artificial intelligence (AI). This is certainly not “trivial”, and I don’t think the barriers can be overcome by technology alone.

Today, most brain-machine interfaces use an approach called “biomimetic” decoding. First, brain activity is recorded while the user imagines various actions such as moving their arm left or right. Once we know which brain cells prefer different directions, we can “decode” subsequent movements by tallying their action potentials like votes.
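To make the voting idea concrete, here is a minimal sketch in Python. It is an illustration under simplifying assumptions, not the method of any particular device: the function names `calibrate` and `decode` and the spike counts are invented for this example, and real decoders are considerably more elaborate.

```python
from collections import defaultdict


def calibrate(trials):
    """Return each cell's preferred direction (hypothetical helper).

    trials: list of (direction, spike_counts) pairs recorded while the
    user imagines moving in `direction`; spike_counts[i] is the number
    of action potentials from cell i on that trial.
    """
    n_cells = len(trials[0][1])
    totals = [defaultdict(int) for _ in range(n_cells)]
    n_trials = defaultdict(int)
    for direction, counts in trials:
        n_trials[direction] += 1
        for cell, count in enumerate(counts):
            totals[cell][direction] += count
    # A cell "prefers" the direction with its highest mean spike count.
    return [max(t, key=lambda d: t[d] / n_trials[d]) for t in totals]


def decode(preferred, counts):
    """Tally spikes as votes for each cell's preferred direction."""
    votes = defaultdict(int)
    for direction, count in zip(preferred, counts):
        votes[direction] += count
    return max(votes, key=votes.get)


# Made-up calibration data: three cells, two imagined directions.
calibration = [
    ("left", [9, 2, 1]),
    ("left", [8, 3, 0]),
    ("right", [1, 7, 6]),
    ("right", [2, 9, 5]),
]
preferred = calibrate(calibration)
decoded = decode(preferred, [7, 1, 2])  # spike counts from a new trial
```

Even in this toy version, the decoder can only ever report a direction that appeared during calibration, which hints at why the approach is hard to stretch beyond simple movements.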

This approach works adequately for simple movements, but can it ever generalise to more complex mental processes? Even if Neuralink could sample enough of the 100 billion cells in my brain, how many different thoughts would I first have to think to calibrate a useful mind-reading device, and how long would that take? Does my brain activity even sound the same each time I think the same thought? And when I think of, say, going to the Moon, does my brain sound anything like Musk’s?

Some researchers hope that AI can sidestep these problems, in the same way it has helped computers to understand speech. Perhaps given enough data, AI could learn to understand the signals from anyone’s brain. However, unlike thoughts, language evolved for communication with others, so different speakers share common rules such as grammar and syntax.

While the large-scale anatomy of different brains is similar, at the level of individual brain cells, we are all unique. Recently, neuroscientists have started exploring intermediate scales, searching for structure in the activity patterns of large groups of cells. Perhaps, in future, we will uncover a set of universal rules for thought processes that will simplify the task of mind reading. But the state of our current understanding offers no guarantees.

Alternatively, we might exploit the brain’s own intelligence. Perhaps we should think of brain-machine interfaces as tools that we have to master, like learning to drive a car. When people are shown a real-time display of the signal from individual cells in their own brain, they can often learn to increase or decrease that activity through a process called neurofeedback.

Perhaps people using the Neuralink could learn to activate their brain cells in the right way to control the interface. However, recent research suggests that the brain may not be as flexible as we once thought and, so far, neurofeedback subjects struggle to produce complex patterns of brain activity that differ from those occurring naturally.

When it comes to influencing, rather than reading, the brain, the challenges are greater still. Electrical stimulation activates many cells around each electrode, as was nicely shown in the Neuralink presentation. But cells with different roles are mixed together, so it is hard to produce a meaningful experience. Stimulating visual areas of the brain may allow blind people to perceive flashes of light, but we are still far from reproducing even simple visual scenes. Optogenetics, which uses light to activate genetically modified brain cells, can be more selective but has yet to be attempted in the human brain.

Whether or not Musk can – or should – achieve his ultimate aims, the resources that he and other tech entrepreneurs are investing in brain-machine interfaces are sure to advance our scientific understanding. I hope that Musk shares his wireless implant with the many scientists who are also trying to unravel the mysteries of the brain.

That said, decades of research have shown that the brain does not yield its secrets easily and is likely to resist our attempts at mind hacking for some decades yet.

- is Professor of Neural Interfaces, Newcastle University

- This article first appeared on The Conversation