Talk about bad timing. Like Guerrilla Games tip-toeing around Breath of the Wild in 2017 (and later, Elden Ring), Google just held its annual I/O developer conference, where it placed a heavy emphasis on artificial intelligence. You know, just a day after OpenAI, which is considered to be the leader in the industry, brought GPT-4o to life. Coincidence? We think not.

Still, this is Google we’re talking about. It’s pretty difficult to knock the steam out of an event like I/O, and rightfully so. Sundar Pichai, CEO of Google, took to the stage to unveil a flurry of new AI-powered features, heavily focusing on software like Google Gemini, and features headed Android’s way.

Back in a Flash

Meet Gemini 1.5 Flash, Google’s latest AI model to join the Gemini family and “the fastest Gemini model served in the API.” Flash is a multimodal model that packs the same power as Gemini 1.5 Pro, though it’s optimized for “high-volume, high-frequency tasks at scale,” thanks to 1.5 Pro’s knowledge being distilled down into a smaller, more efficient model.

Specifically, Flash excels at summarization, chat applications, image and video captioning, data extraction from long documents and tables, “and more,” all while being quicker at the job. Google also made some changes to 1.5 Pro, doubling the model’s context window from one million tokens to two million, while also improving the model’s reasoning and translation abilities.

“We added audio understanding in the Gemini API and Google AI Studio, so 1.5 Pro can now reason across image and audio for videos uploaded in Google AI Studio. And we’re now integrating 1.5 Pro into Google products, including Gemini Advanced and in Workspace apps.”

Both Flash and 1.5 Pro are available to the public via Google AI Studio and Vertex AI in a public preview, though with a one-million-token context window. If you’re interested in 1.5 Pro’s doubled context window, you’ll need to join the waitlist, which is open to developers using the API and to Google Cloud customers.

Project Astra is the future of AI assistance, apparently

Talk about timing again, huh? It turns out that outsourcing artificial intelligence to a third-party device like the Humane AI Pin or Rabbit R1 wasn’t the greatest idea. As it happens, we already have the best device for AI: smartphones. That’s the idea with Google’s new Project Astra, something it describes as the future of artificially intelligent assistants.

Google’s thinking is that an assistant can only be useful if it’s interacting with the world in the same way humans do. In other words, an AI assistant that can see, hear and truly understand context, all while responding quickly enough that you forget it’s a robot. Google’s got the multimodal part locked down. It’s making it sound natural that’s had Google on the back foot.

Google showed off Astra’s skills in a two-minute-long uninterrupted demonstration seen above. We get a basic understanding of how it all works, with Astra being able to solve problems on a whiteboard, remind its users where it last saw their glasses, and even come up with a band name on a whim. If that sounds at all familiar, it’s the same idea OpenAI’s GPT-4o is cooking up, but with Google’s far-reaching ecosystem in tow.

The technology is impressive, sure, but we envision Astra getting its time in the spotlight only when products like Meta’s Ray-Ban smart glasses inevitably become more accessible to the layman. Until then, we’ll have to make do with Astra’s involvement in the Gemini app and web experience later this year.

Retro-fitting AI on Android

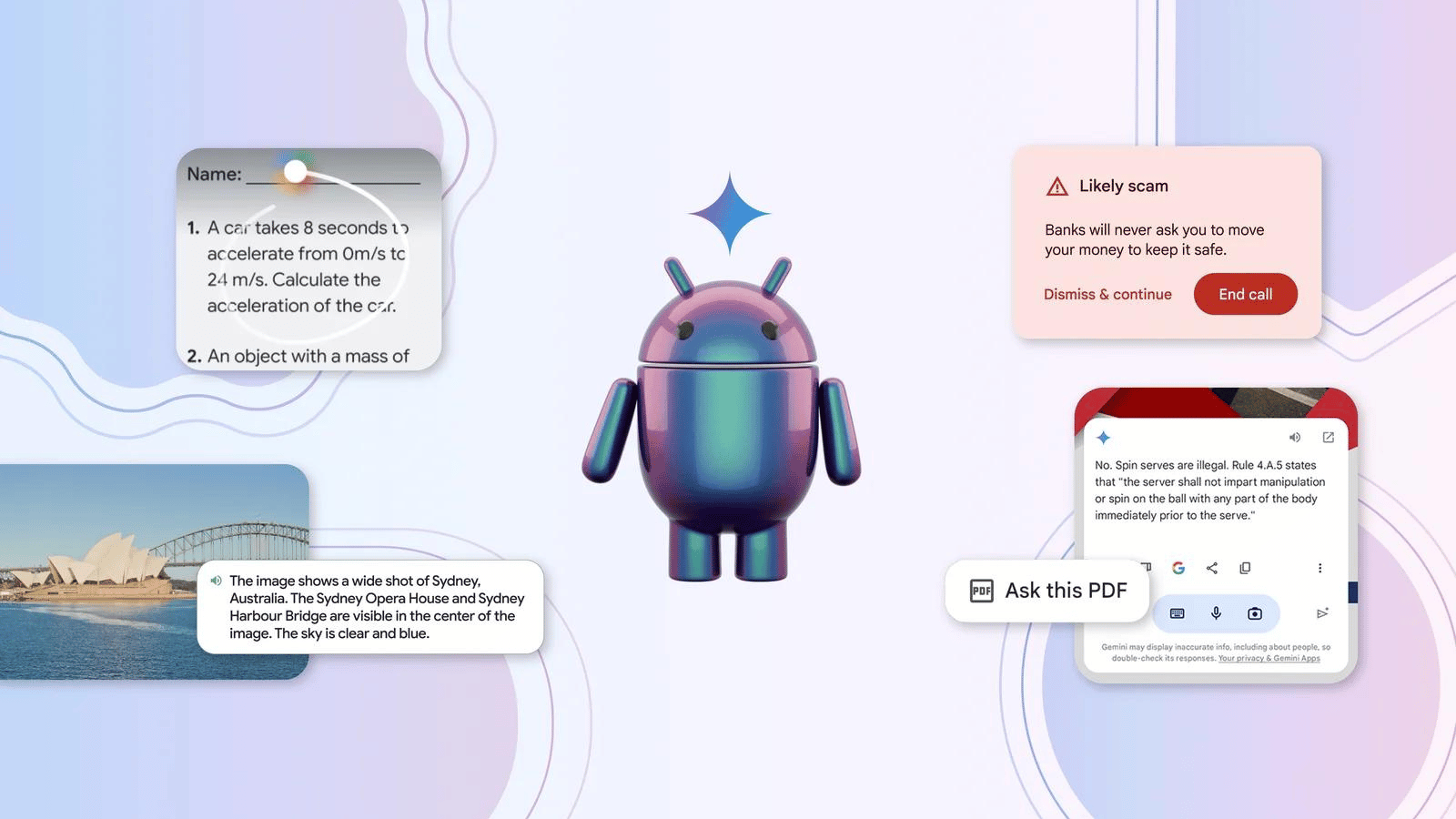

Wondering where Android fits into all this? Google announced a couple of minor additions and changes to the AI features throughout the operating system, such as smartening up Circle to Search before rolling it out to a whole lot more Android devices. Google didn’t dive into details of when that might be happening or which devices are getting the Circle to Search treatment.

We do know that it’s capable of solving math equations now, because why not? Rather than solve the problem outright, it’ll help the user reach the end goal by breaking it down into steps. This was done to assuage any parents or teachers worried about the advancement of AI. It’s worth mentioning that if your kid couldn’t simply whip out Photomath, then they’re not worth the hassle anyway.

TalkBack is also being kitted out with Gemini Nano’s multimodal abilities, to give blind or vision-impaired users a clearer and more fitting description of what’s happening on-screen.

Finally, there’s the new live scam detection feature that does, well, exactly what it says on the tin. With Gemini Nano listening in on your calls (locally, nothing is sent to the cloud), it’s able to keep an ear out for any language that’s most often used during scam calls. For example, a “bank representative” asking for funds or a gift card would throw up a warning from Nano, advising you to end the call.

Google will share more about the development of these features later this year.