Many people think of privacy as a modern invention, an anomaly made possible by the rise of urbanization. If that were the case, then acquiescing to the current erosion of privacy might not be particularly alarming.

As calls for Congress to protect privacy increase, it’s important to understand what privacy is. In a policy brief in Science, we and our colleague Jeff Hancock suggest that doing so calls for a better understanding of privacy’s origins.

Research evidence refutes the notion that privacy is a recent invention. While privacy rights or values may be modern notions, examples of privacy norms and privacy-seeking behaviors abound across cultures, regions and eras of human history.

As privacy researchers who study information systems, behavioral research and public policy, we believe that accounting for the potential evolutionary roots of privacy concerns can help explain why people struggle with privacy today. It may also help inform the development of technologies and policies that can better align the digital world with the human sense of privacy.

The misty origins of privacy

Humans have sought and attempted to manage privacy since the dawn of civilization. People from ancient Greece to ancient China were concerned with the boundaries of public and private life. The male head of the household, or pater familias, in ancient Roman families would have his slaves move their cots to some remote corner of the house when he wanted to spend the evening alone.

Attention to privacy is also found in preindustrial societies. For example, the Mehinacu tribe in South America lived in communal accommodations but built private houses miles away for members to achieve some seclusion.

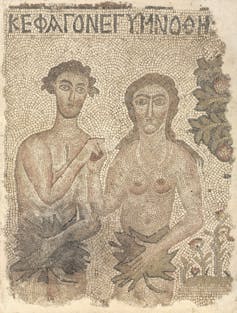

Evidence of a drive toward privacy can even be found in the holy texts of ancient monotheistic religions: the Quran’s instructions against spying on one another, the Talmud’s advice not to place windows overlooking neighbors’ windows, and the biblical story of Adam and Eve covering their nakedness after eating the forbidden fruit.

The drive for privacy appears to be simultaneously culturally specific and culturally universal. Norms and behaviors change across peoples and times, but all cultures seem to manifest that drive. Scholars in the past century who studied the history of privacy provide an explanation for this: Privacy concerns may have evolutionary roots.

By this account, the need for privacy evolved from physical needs for protection, security and self-interest. The ability to sense the presence of others and choose exposure or seclusion provides an evolutionary advantage: a “sense” of privacy.

Humans’ sense of privacy helps them regulate the boundaries of public and private with efficient, instinctual mastery. You notice when a stranger is walking too close behind you. You typically abandon the topic of conversation when a distant acquaintance approaches while you are engaged in an intimate discussion with a friend.

Privacy blind spots

An evolutionary theory of privacy helps explain the hurdles people face in protecting personal information online, even when they claim to care about privacy. Human senses and the new digital reality are mismatched. Online, our senses fail us. You do not see Facebook tracking your activity in order to profile and influence you. You do not hear law enforcement taking your picture to identify you.

Humans might have evolved to use their senses to alert them to privacy risks, but those same senses put humans at a disadvantage when they try to identify privacy risks in the online world. Online sensory cues are lacking, and worse, dark patterns – malicious website design elements – trick those senses into perceiving a risky situation as safe.

This may explain why privacy notice and consent mechanisms – so popular with tech companies and for a long time among policymakers – fail to address the problem of privacy. They place the burden of understanding privacy risks on consumers, with notices and settings that are often ineffectual or gamed by platforms and tech companies.

These mechanisms fail because people react to privacy invasions viscerally, using their senses more than their cognition.

Protecting privacy in the digital age

An evolutionary account of privacy shows that if society is determined to protect people’s ability to manage the boundaries of public and private in the modern age, privacy protection needs to be embedded in the very fabric of digital systems. When the evolving technology of cars made them so fast that drivers’ reaction times became unreliable tools for avoiding accidents and collisions, policymakers stepped in to drive technological responses such as seat belts and, later, airbags.

Ensuring online privacy also requires a coordinated combination of technology and policy interventions. Baseline safeguards of data protection, such as those in the Organization for Economic Cooperation and Development’s Guidelines on the Protection of Privacy and Transborder Flows of Personal Data, can be achieved with the right technologies.

Examples include data analysis techniques that preserve anonymity, such as the ones enabled by differential privacy; privacy-enhancing technologies such as user-friendly encrypted email services and anonymous browsing; and personalized intelligent privacy assistants, which learn users’ privacy preferences.
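To give a rough sense of how differential privacy can work in practice, here is a minimal sketch of one common approach, the Laplace mechanism, applied to a simple count. It is an illustration under stated assumptions, not a production implementation: the function name `private_count`, the sample ages and the choice of `epsilon` are all hypothetical, and real deployments also need to track how much privacy budget is spent across repeated queries.

```python
import random


def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of the records matching `predicate`.

    Illustrative Laplace mechanism; the name and parameters are hypothetical.
    Smaller `epsilon` means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()

    true_count = sum(1 for record in records if predicate(record))

    # Adding or removing one person changes a count by at most 1,
    # so the sensitivity is 1 and the Laplace noise scale is 1 / epsilon.
    scale = 1.0 / epsilon

    # The difference of two independent exponential draws, each with
    # mean `scale`, follows a Laplace distribution centered at 0.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


# Hypothetical data: ages of survey respondents.
ages = [23, 35, 41, 52, 67, 29, 38]
noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0)
print(f"Noisy number of respondents aged 40 or older: {noisy:.2f}")
```

Each run prints a slightly different number. An analyst still learns roughly how many respondents are 40 or older, but the random noise makes it hard to tell whether any one person is in the data.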

These technologies have the potential to preserve privacy without hurting modern society’s reliance on collecting and analyzing data. And since the incentives of industry players to exploit the data economy are unlikely to disappear, we believe that regulatory interventions that support the development and deployment of these technologies will be necessary.